In the Second Machine Age, AI, Deep Learning, the Internet of Things & Predictive Analytics Will Be the Key Disruptions for 21st-Century Businesses

R2-D2 and Obi-Wan Kenobi of Star Wars and HAL, the onboard supercomputer of 2001: A Space Odyssey, are no longer just nice-to-view escapism in a movie theatre, or augmented reality games that are good for triggering flights of fancy. Variants of these will soon be carried around by many people in their shirt pockets. Artificial Intelligence (AI), Neural Networks, the Internet of Things (IoT) and Predictive Analytics using zettabytes of data are set to go increasingly mainstream from 2015 onward. The second machine age, an age which will witness accelerated technological developments and advancements, is very much upon us.

Already, by combining deep learning techniques with what is termed 'reinforcement learning', software is being written that learns by taking actions and getting feedback on their effects, as humans and animals do. Welcome to the world of smart neural networks.
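To make the act-and-get-feedback loop concrete, here is a minimal sketch in Python. It is not a deep learning system: it uses a simple table of estimates and an epsilon-greedy rule, chosen here purely for illustration. The function name `train_bandit`, the reward probabilities and the parameter values are all assumptions, not anything described in the systems mentioned above.

```python
import random

def train_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays off best purely from trial and feedback.

    reward_probs: hypothetical chance that each action yields a reward.
    The learner never sees these numbers; it only sees the rewards.
    """
    rng = random.Random(seed)
    n = len(reward_probs)
    counts = [0] * n      # how often each action has been tried
    values = [0.0] * n    # running estimate of each action's average reward
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                         # explore: try something at random
        else:
            action = max(range(n), key=lambda a: values[a])   # exploit: use the best estimate so far
        # Feedback from the environment: 1.0 for a reward, 0.0 otherwise.
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Nudge the estimate toward the observed reward (incremental average).
        values[action] += (reward - values[action]) / counts[action]
    return values, counts

values, counts = train_bandit([0.2, 0.5, 0.8])
best_action = max(range(len(values)), key=lambda a: values[a])
print("Estimated value of each action:", [round(v, 2) for v in values])
print("Action the learner prefers:", best_action)
```

The learner starts out knowing nothing, yet after enough rounds of feedback it settles on the action that pays off most often. Deep reinforcement learning replaces the small table of estimates with a neural network, but the loop of acting, observing and adjusting is the same idea.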

As Artificial Intelligence becomes increasingly mainstream, it is time to dispel the dystopian notions some may have about the evil side effects of this domain. Industrial or consumer robots, together with smart algorithms and deep-learning systems, are enablers which, once harnessed, will bring about quantum leaps in productivity and efficiency, particularly where routine tasks or mammoth projects are concerned. Neural nets are modelled on biological brains. When one attempts a new task, a certain set of neurons fires. After observing the results, the brain uses that feedback in subsequent iterations to decide which set of neurons needs to be activated. A neural network essentially replicates this process in code. However, instead of trying to duplicate the dazzlingly complex tangle of neurons in the human brain, a neural network, which is far simpler, organizes its neurons neatly into layers.
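The idea of neurons organized neatly into layers can be sketched in a few lines of Python. This is an illustrative toy, not a trained model: the weights and biases below are made-up numbers, and the helper names `layer_forward` and `network_forward` are assumptions introduced for this example.

```python
import math

def sigmoid(x):
    """Squash any number into the range 0..1, a rough stand-in for how strongly a neuron fires."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """One layer: every neuron takes a weighted sum of all inputs, adds its bias, then fires."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

def network_forward(inputs, layers):
    """Pass the signal through the layers in order; each layer's output feeds the next."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.5], [0.3, 0.8], [-0.2, 0.1]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.0]),
]
output = network_forward([1.0, 0.0], layers)
print("Network output:", output)
```

Training such a network means adjusting the weights and biases using feedback on how wrong the output was, which is the coded counterpart of the brain deciding which neurons to activate next time.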

|

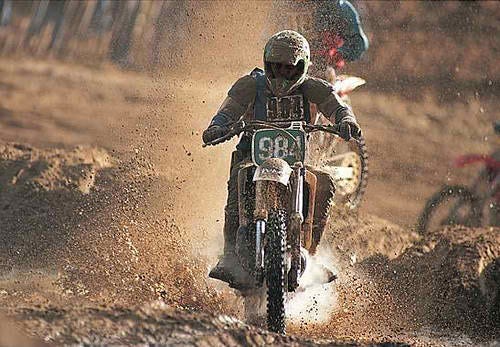

| Caption by Neural Image Caption Generator: ' A person riding a motorcycle on a dirt road.' |

Breakthroughs in this domain will, among other things, transform Search as we see it today. 'Smart Search', if one may call it that, will bring to bear an understanding of the real world and blend it with the individual's preferences, knowledge and experiences, often anticipating the questions the user has in mind and coming up with the answers. It will also spontaneously surface information that the user may need. In several ways, 'Smart Search' will become the user's alternate brain. As the amount of data generated and available doubles every few years, conventional search will become too unfocused, cumbersome and unproductive. Instead of sifting through mountains of content to extract the relevant information, users will rely on smart, personalized algorithms to do that job and serve up exactly what they are looking for.

Huge databases are being built which will free human memory of the need to store, organize and process much of the information it currently handles. Transactive memory techniques, where groups share the task of remembering different things, are being applied to the organization of these databases so that people don't have to scan their own memories for needed information but can scan 'online memories' instead. As more and more people discover that using the Internet as 'prosthetic memory' is both faster and draws on more knowledge than relying on one's own brain, knowledge is increasingly being outsourced to the Internet.

Google is currently engaged in building the ultimate transactive memory service, the Knowledge Vault, which is an ambitious, scalable, self-learning, consummate knowledge machine. It is machine-curated: it automatically gathers and combines information from the web and uses a system of confidence scores to determine which facts are likely to be true. By way of an example, till recently it had harvested 1.6 billion facts, of which only 271 million had more than a 90% chance of being right. Both these numbers are expected to keep growing.
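The confidence idea and the two figures quoted above can be illustrated with a short Python sketch. The sample facts and their scores are invented for this example, and `high_confidence` is a hypothetical helper; nothing here reflects how the Knowledge Vault itself is implemented.

```python
def high_confidence(facts, threshold=0.9):
    """Keep only the facts whose estimated chance of being right exceeds the threshold."""
    return [(statement, score) for statement, score in facts if score > threshold]

# Made-up facts with made-up confidence scores, for illustration only.
facts = [
    ("Paris is the capital of France", 0.99),
    ("The Moon is made of cheese", 0.02),
    ("Water boils at 100 degrees Celsius at sea level", 0.97),
    ("A celebrity was born in 1975", 0.61),
]
trusted = high_confidence(facts)
print("Facts above the 90% bar:", [statement for statement, _ in trusted])

# The figures quoted in the article: 271 million of 1.6 billion facts clear the bar.
share = 271_000_000 / 1_600_000_000
print(f"Share of harvested facts above 90% confidence: {share:.1%}")
```

In other words, roughly one harvested fact in six cleared the 90% bar at the time, which shows how much of the raw material gathered from the web is treated as uncertain.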

The Internet of Things (IoT), a scenario where objects, animals or people are fitted with chips and sensors that carry unique identifiers and can transfer data over a network without human-to-human or human-to-computer interaction, dovetails quite well into the scenario mentioned above. IPv6's huge increase in address space has been a major facilitator in the rise of the IoT. Estimates are that by 2020 there will be 30 billion devices in the market communicating automatically and wirelessly. The accompanying spurt in the sheer volume of data is driving many enterprises, which have traditionally had their own data centres, to outsource data processing to third-party providers. Even so, the challenge has increasingly been to assimilate all the contextual data and extract what is relevant from it. Enterprises that manage to do so are also able to package the results as products and services for their customers. These products and services span customer service, maintenance, transportation and logistics, and a variety of smart home services, among others. Adoption of open systems and cloud-based data analytics will lead to greater integration and the introduction of many more value-added smart services, leading in some cases to new and alternate business models.
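To put the IPv6 address space mentioned above in perspective, the arithmetic can be checked with Python's standard `ipaddress` module. The 30 billion figure is the estimate quoted in the article; the variable names are assumptions made for this sketch.

```python
import ipaddress

# Total number of addresses in each protocol's full address space.
ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses   # 2**32
ipv6_total = ipaddress.ip_network("::/0").num_addresses        # 2**128

devices = 30_000_000_000  # estimate of connected devices quoted in the article

print("IPv4 addresses:", ipv4_total)
print("IPv6 addresses:", ipv6_total)
print("Could IPv4 give every device its own address?", ipv4_total >= devices)
print("IPv6 addresses available per device:", ipv6_total // devices)
```

IPv4's roughly 4.3 billion addresses fall well short of 30 billion devices, whereas IPv6 leaves an astronomically large number of addresses for each one, which is why it is seen as a facilitator for the IoT.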

[For more details on the IoT, check out the embedded video above.]

AI, IoT & Big Data Analytics are dovetailing in several cases, helping businesses improve productivity and efficiency while driving down costs. In the healthcare industry, for example, one application acts as the 'air traffic controller' for hospitals and healthcare systems; it boosts operational efficiency and improves patient care by helping staff make better choices about the allocation of existing resources.

Some of the other interesting uses of all this technology have been in the area of intelligible text generation after scanning data such as news headlines, sports statistics, company financial reports, sales figures and housing starts, to mention a few possible areas. The resulting reports, which appear to have been written by a human, serve as a low-cost tool to expand and enrich coverage when editorial resources and budgets are under pressure. Current uses range from newspaper chains generating automated summary articles to agencies generating financial analysis reports for their customers. Some media mavens are predicting that during the next decade, a computer program may win the Pulitzer Prize for an article that it has composed.

Another domain where these technologies could dovetail is that of innovative, smart cities, where they could help improve public services, increase operational efficiency and ultimately enhance residents' quality of life. Some of the key elements of such cities would be a large number of sensors and surveillance cameras, linked by a highly evolved, secure and reliable telecommunications infrastructure, along with the ICT infrastructure needed to bring Big Data and predictive analytics capabilities to bear on the huge volume of real-time, stored and archived data, both structured and unstructured, within and across geographies. Some of the desired outcomes would be proactive decision-making capabilities and better, smarter choices for public sector organizations and agencies as well as for residents of such a city, thus enhancing growth, economic competitiveness, innovation and quality of life for all concerned.

Smart Cities: Turning Data Into Insight
